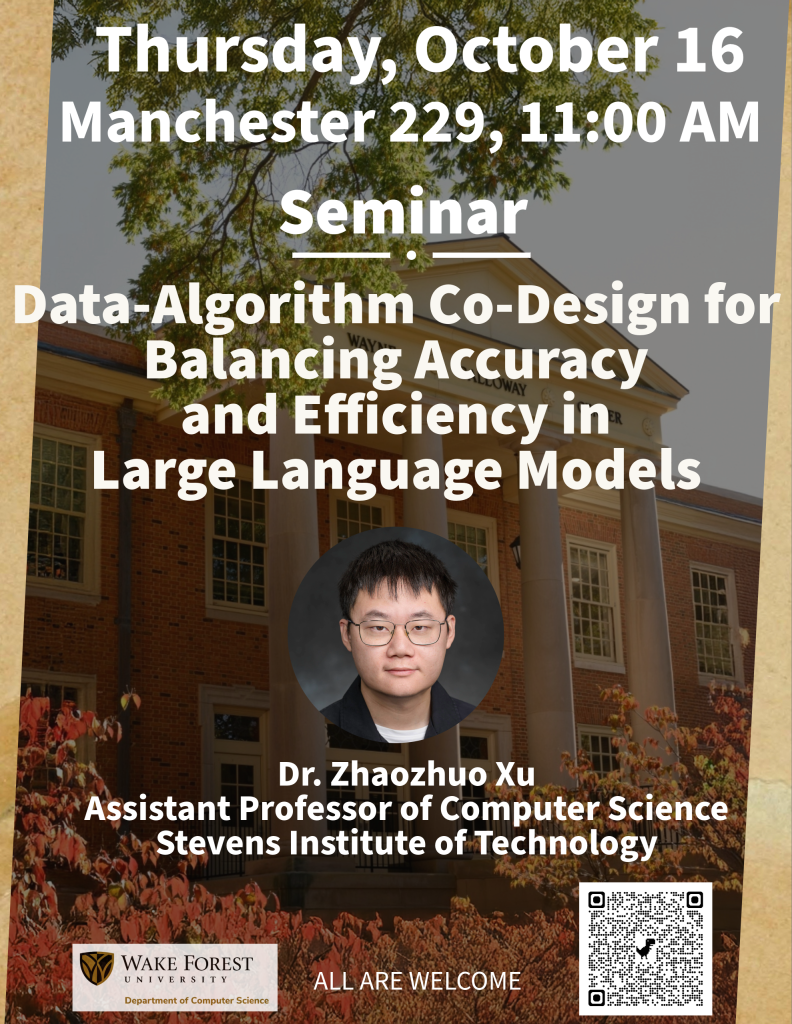

Data-Algorithm Co-Design for Balancing Accuracy and Efficiency in Large Language Models by Dr. Zhaozhuo Xu

Abstract: As large language models (LLMs) grow in size, recent algorithmic methods such as quantization, sparsification, and approximate gradient estimation aim to reduce memory and computation costs. However, these gains often come at the expense of accuracy. This talk introduces a data-algorithm co-design approach to address this trade-off by aligning training and calibration data with the assumptions of the algorithm.

We begin by highlighting the need to select data that matches the behavior of the algorithm. Two approaches, RapidIn and ALinFiK, are unified under the concept of linearized influence kernels, which estimate the impact of individual tokens on model outputs. RapidIn identifies influential tokens from past training data, while ALinFiK predicts which tokens will be most useful in future training. Together, they support efficient and targeted data selection.

After identifying the right data, we demonstrate how to rephrase it using another LLM to better align with the algorithm. This is shown through OAT Rephrase, which rewrites training data for improved zeroth-order fine-tuning, and Macaron, which rephrases calibration data to improve post-training quantization. Both preserve the original meaning while enhancing compatibility with the algorithm.

Finally, once the data is retrieved and rephrased, we use data-aware hyperparameter tuning to refine performance further. This end-to-end workflow involves retrieving relevant data, rephrasing it to match algorithm behavior, and tuning algorithm parameters. It enables efficiency improvements without compromising model quality.

Bio: Zhaozhuo Xu is an Assistant Professor of Computer Science at Stevens Institute of Technology. He earned his Ph.D. from Rice University and his M.S. from Stanford University. His research focuses on developing randomized algorithms to improve the efficiency and scalability of AI systems. Dr. Xu’s work has been published in premier venues such as NeurIPS, ICML, ICLR, OSDI, and ACL, as well as in Nature npj Artificial Intelligence. His innovations in scalable AI have been integrated into widely used libraries like Hugging Face and adopted by several startups. He serves as an Associate Editor for Neurocomputing and as an Area Chair for leading conferences, including NeurIPS, ICLR, ICML, ACL, EMNLP, NAACL, and COLING. Dr. Xu has been recognized with the AAAI New Faculty Highlights (2025), the NSF CRII Award (2025), and the Stevens Bridging Award.

All are welcome!