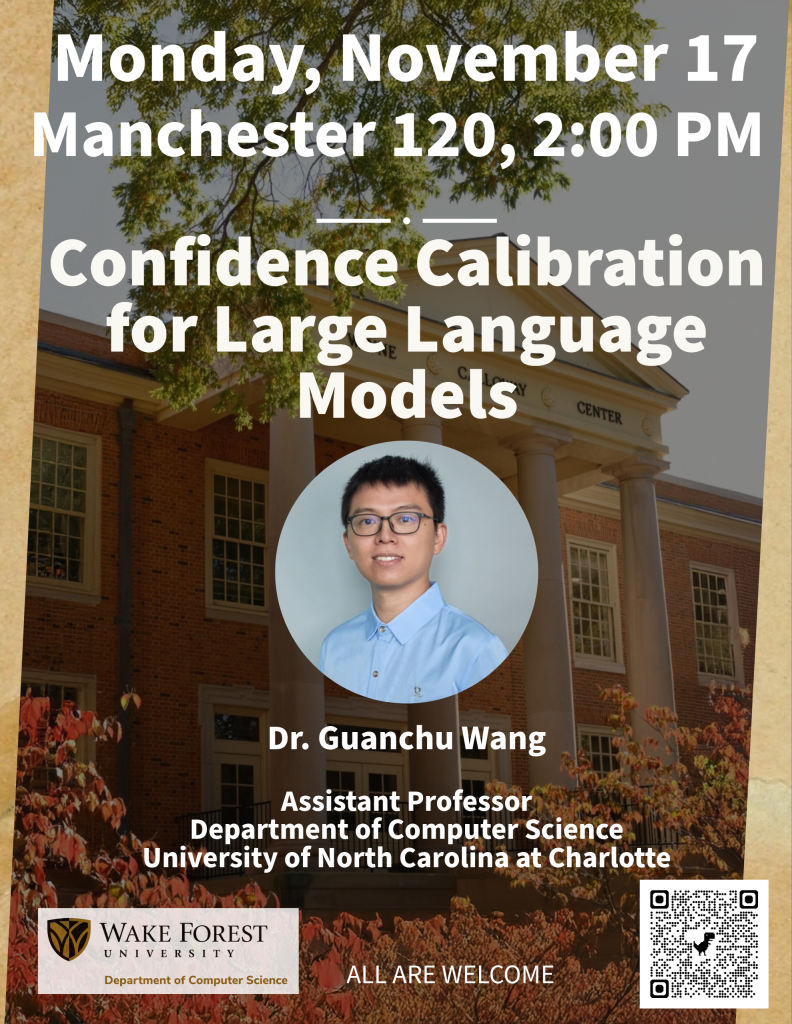

Confidence Calibration for Large Language Models by Dr. Guanchu Wang

Join us Monday, November 17 at 2:00 PM in Manc 120! Dr. Guanchu Wang will present his research, titled "Confidence Calibration for Large Language Models."

Abstract: Despite the widespread adoption of large language models (LLMs) in daily life, their reliability remains a major concern. One critical factor that undermines their reliability is miscalibration: the mismatch between a model’s confidence and its actual accuracy. In this talk, I will share our recent advances in LLM calibration for improving model reliability. Specifically, we identify two major sources of miscalibration: external decision space perturbations and internal representation perturbations. To address these challenges, we develop two complementary approaches, context calibration and representation calibration, that enhance model reliability across different scenarios.

Biography: Guanchu (Gary) Wang is an Assistant Professor in the Department of Computer Science at the University of North Carolina at Charlotte. His research focuses on enhancing the interpretability and reliability of large language models. His work has been published in top-tier AI venues, including NeurIPS, ICLR, and ICML. Notably, two of his papers received a CIKM’22 Best Paper Award and ICML’22 Spotlight recognition.

All are welcome!